The Hidden Threats in Software Development – How AI Is Quietly Changing the Rules of Development

Artificial intelligence (AI) tools are changing how we build software. They help us write code faster, test it more easily, and finish projects sooner.

But behind all this progress is a growing problem: the same tools can also create serious security risks, introducing AI threats in software development that many developers and teams overlook.

Imagine you're coding late at night to meet a deadline. You ask an AI helper (like ChatGPT) to give you some code. It responds with a working example and recommends a third-party software package (a library).

You install it, trusting the AI. But the next day, your system is hacked.

Why? The package was never real to begin with. The AI made up its name, a mistake called a "hallucination." A hacker noticed that fake name, registered a real package under it, and filled it with malicious code.

So, is artificial intelligence a threat to software development? And more importantly, are developers and organizations truly prepared?

The Research: AI as a Source of Software Vulnerabilities

The research team at the University of Texas at San Antonio (UTSA), led by doctoral student Joe Spracklen and supervised by Dr. Murtuza Jadliwala, investigated the security implications of using AI to write software. Their findings, accepted for presentation at the USENIX Security Symposium 2025, expose a rarely discussed but critical flaw in how large language models (LLMs) work: package hallucination.

What is a package hallucination?

Imagine asking an AI to write a few lines of code—and it gives you a clean solution… except one of the libraries doesn’t exist. That’s package hallucination.

It’s a phenomenon where an AI model like ChatGPT recommends a software package (library or module) that doesn’t actually exist. This might seem like a minor bug, but in practice it introduces an AI threat to cybersecurity with potentially wide-reaching implications. More broadly, an AI model can confidently produce:

- A software library that doesn’t exist

- A fake command or setting

- Incorrect information that looks real

Each of these is a hallucination. When the AI suggests a package name that doesn’t exist, it opens the door for attackers to publish malicious software under that name.

How AI Threatens Developers Through Package Hallucination

Let’s walk through how this threat actually plays out in real-world coding scenarios:

- A developer asks an AI to generate code for a common task.

- The AI returns functioning code—but includes a reference to a non-existent package.

- A hacker monitoring AI behavior notices these hallucinated names.

- The hacker quickly registers a malicious package with that exact name on a popular public registry (e.g., npm, PyPI).

- The next time a developer installs the suggested package without verifying it, the malicious code is downloaded and can run immediately (for example, through install-time scripts) or as soon as it is imported, giving the attacker access to the user’s system.

This tactic is surprisingly effective because it doesn’t rely on traditional exploitation. Instead, it weaponizes developer trust in AI and the open-source ecosystem.
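The attack only works if the suggested package is installed without anyone checking it. As a minimal sketch, assuming Python and PyPI's public JSON endpoint (`https://pypi.org/pypi/<name>/json`, which responds with HTTP 404 for projects that do not exist), a script can check whether a name is registered at all. The function names and the injectable `opener` parameter are illustrative choices, not part of any official tool.

```python
import json
import urllib.error
import urllib.request

# PyPI's public JSON API; unknown project names return HTTP 404.
PYPI_JSON_URL = "https://pypi.org/pypi/{name}/json"


def fetch_pypi_metadata(name, opener=urllib.request.urlopen):
    """Return PyPI metadata for `name` as a dict, or None if no such project exists.

    `opener` is injectable (hypothetical design choice) so the check can be
    tested without network access.
    """
    url = PYPI_JSON_URL.format(name=name)
    try:
        with opener(url, timeout=10) as response:
            return json.load(response)
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return None  # the registry has never heard of this name
        raise  # any other HTTP error: do not silently treat the package as safe


def package_exists(name, opener=urllib.request.urlopen):
    """True if PyPI knows the project name, False if it returns 404."""
    return fetch_pypi_metadata(name, opener) is not None
```

A missing package is a strong sign the AI invented the name. A package that does exist proves much less: if an attacker has already registered the hallucinated name, this check passes, so it should be combined with a look at the package's maintainers and history.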

Real Research, Real Risk

The UTSA (University of Texas at San Antonio) team generated a massive dataset of 2.23 million code samples using multiple AI tools, primarily targeting Python and JavaScript.

Here’s what they found, along with some broader industry context:

- 440,445 of those samples (nearly 20%) referenced hallucinated (non-existent) packages.

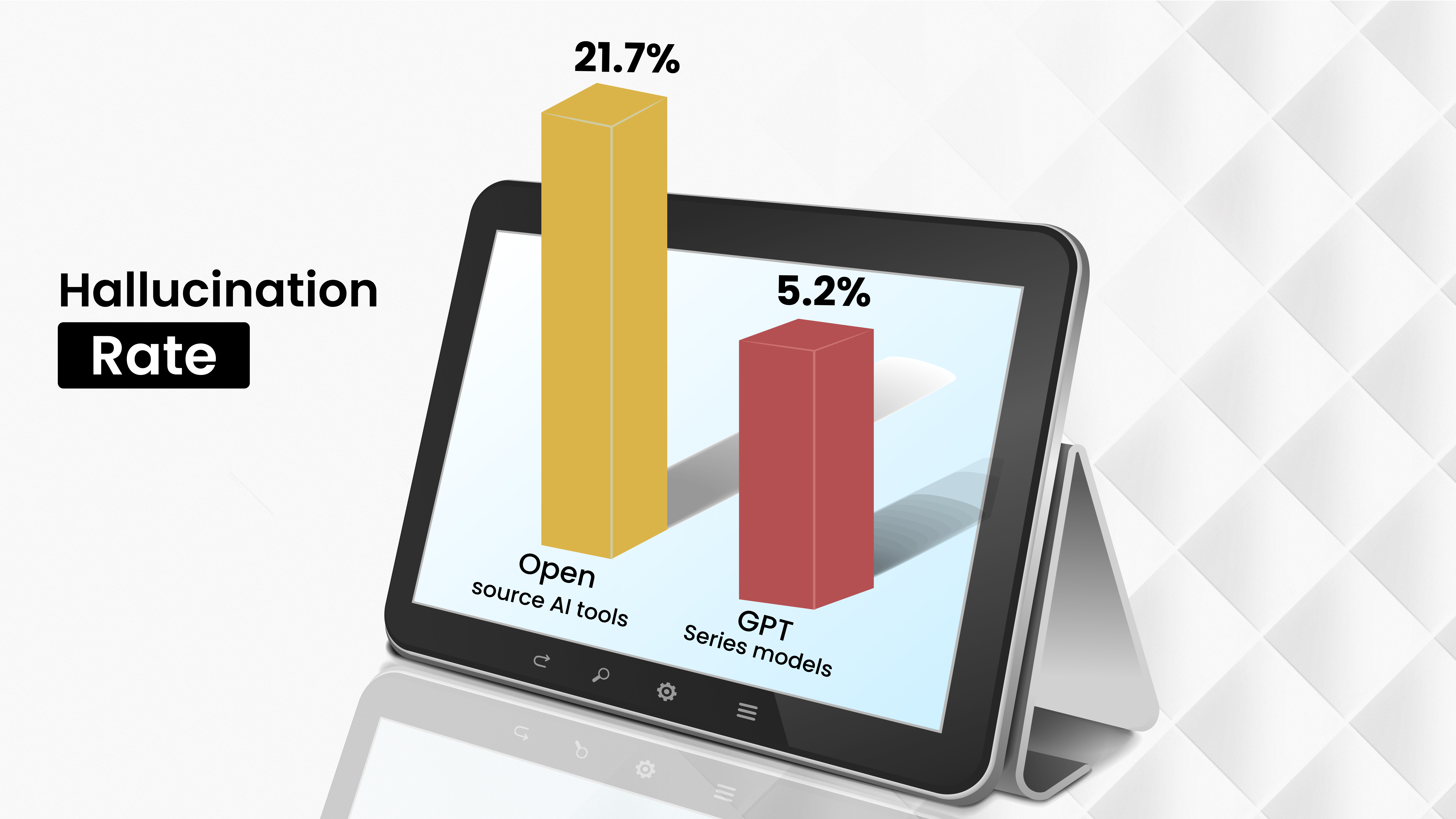

- GPT-series models had a 5.2% hallucination rate.

- Open-source AI tools fared worse, with a 21.7% hallucination rate.

- Python was more resistant to package hallucination than JavaScript.

- Up to 97% of developers now use AI in some form during development.

- Around 30% of code written today is generated by AI tools.

These numbers highlight a major blind spot: developers are often unaware that the very tools meant to save them time could expose them to risk.
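To see why even a single-digit rate matters, here is a rough worked example. It treats the reported rates as an independent per-package probability, which is a simplifying assumption rather than something the study claims, and computes the chance that at least one of `n` AI-suggested packages is hallucinated: `1 - (1 - rate) ** n`. The function name is illustrative.

```python
def chance_of_any_hallucination(rate, n_packages):
    """Probability that at least one of n package suggestions is hallucinated.

    Assumes each suggestion is hallucinated independently with probability
    `rate`, a simplification that real model behaviour need not follow.
    """
    if not 0.0 <= rate <= 1.0:
        raise ValueError("rate must be between 0 and 1")
    return 1.0 - (1.0 - rate) ** n_packages


# Rates reported in the article, applied to snippets of different sizes.
for label, rate in [("GPT-series (5.2%)", 0.052), ("open-source (21.7%)", 0.217)]:
    for n in (1, 5, 10):
        print(f"{label}, {n} packages: {chance_of_any_hallucination(rate, n):.1%}")
```

Under that assumption, a snippet that pulls in ten packages has roughly a 41% chance of containing at least one hallucinated name at the 5.2% rate, and about 91% at the 21.7% rate.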

How Hackers Use AI Mistakes

Here’s how a fake package becomes a real threat:

- A developer asks AI to generate code

- The AI suggests a fake package

- A hacker sees that name and quickly creates a real, dangerous package

- The developer installs it without checking

- The system is now infected

The AI isn’t trying to be harmful—it just made a mistake. But hackers take advantage of it.

AI Threats in Software Development: Not Just Hypothetical

Traditionally, attackers have tricked developers with package names that look like real ones (like request vs. requests), a technique called typosquatting.
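Classic typosquats can often be caught by comparing a requested name against packages you already know. A minimal sketch using Python's standard-library `difflib`, assuming a hypothetical `KNOWN_PACKAGES` list and an arbitrary similarity cutoff of 0.85:

```python
import difflib

# Hypothetical list of well-known packages; in practice this might come from
# your lockfile or the dependencies your team already uses.
KNOWN_PACKAGES = ("requests", "numpy", "pandas", "flask", "django")


def typosquat_suspects(name, known=KNOWN_PACKAGES, cutoff=0.85):
    """Return known package names that `name` closely resembles but does not equal."""
    normalized = name.lower()
    if normalized in known:
        return []  # exact match to a known package: not a near-miss
    return difflib.get_close_matches(normalized, known, n=3, cutoff=cutoff)


print(typosquat_suspects("request"))   # a near-miss of a known name
print(typosquat_suspects("requests"))  # an exact match, so nothing is flagged
```

A brand-new invented name usually resembles nothing on such a list, so a similarity check like this is not sufficient on its own.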

Now it’s worse:

AI can invent completely new package names. Hackers don’t need to copy real ones—they just use the fake names suggested by AI.

This tactic is simple, scalable, and hard to detect. That’s what makes it one of the most concerning AI threats in software development today.
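Because an attacker typically registers a hallucinated name only after spotting it, one weak but cheap signal is how recently the package first appeared. The sketch below assumes the project metadata returned by PyPI's JSON API, where each file listed under `releases` carries an `upload_time_iso_8601` timestamp; the 90-day threshold and the function names are hypothetical choices for illustration.

```python
from datetime import datetime, timedelta, timezone


def first_upload(metadata):
    """Earliest file upload time in a PyPI JSON metadata dict, or None if no files."""
    times = [
        # Replace a trailing "Z" so older Pythons' fromisoformat accepts it.
        datetime.fromisoformat(f["upload_time_iso_8601"].replace("Z", "+00:00"))
        for files in metadata.get("releases", {}).values()
        for f in files
    ]
    return min(times) if times else None


def looks_freshly_registered(metadata, min_age_days=90, now=None):
    """Flag projects whose first upload is recent, or that have no files at all.

    The 90-day default is an arbitrary illustration, not an established threshold.
    """
    now = now or datetime.now(timezone.utc)
    first = first_upload(metadata)
    return first is None or now - first < timedelta(days=min_age_days)
```

Treat a positive result as a prompt for manual review, not a verdict: many legitimate packages are new, and an old package can still be compromised.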

Why Is Artificial Intelligence a Threat in This Context?

Let’s address the question head-on:

Is artificial intelligence a threat to cybersecurity and software development?

The short answer: Yes, when used irresponsibly or without validation.

AI systems have no malicious intent, but their errors, combined with human trust, create exploitable gaps. As AI gets better, users become less skeptical. This creates a dangerous feedback loop:

- LLMs gain trust.

- Errors go unchecked.

- Attackers take advantage.

Developers tend to trust code suggestions, especially when they look syntactically correct. But with LLMs, you’re not just copying from Stack Overflow anymore; you’re potentially importing fabricated or insecure dependencies into your project.

How Developers Can Stay Safe from AI Threats in Software Development

If you’re a developer, team lead, or cybersecurity expert, here are some ways to minimize your exposure to AI-related software threats:

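1. Cross-Check Packages Before Use

- Confirm the package actually exists on the official registry (such as PyPI or npm)

- Check its maintainers, release history, and linked source repository before installing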

2. Use AI Tools with Guardrails

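- Some models make fewer mistakes than others (in the UTSA study, GPT-series models hallucinated packages far less often than open-source ones), but none are error-free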

- Use platforms with safety checks or source links

3. Educate Your Team

- Make sure your team knows about fake packages

- Add a quick check step before installing any new package

4. Advocate for Safer LLMs

- Support AI companies that share how their tools work

- Ask for transparency and better safety features
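The "quick check step" from tip 3 can be automated as a gate in code review or CI. The sketch below assumes a requirements.txt-style list and a hypothetical team allowlist (`APPROVED`); it normalizes names as PEP 503 describes, so `Python_Dateutil` and `python-dateutil` compare equal, and flags anything nobody has vetted yet.

```python
import re


def normalize(name):
    """PEP 503 normalization: runs of "-", "_" and "." are equivalent, case-insensitive."""
    return re.sub(r"[-_.]+", "-", name).lower()


# Hypothetical allowlist of packages the team has already reviewed.
APPROVED = {normalize(n) for n in ["requests", "numpy", "python-dateutil"]}

# Leading project name of a requirement line (stops at version specifiers or extras).
_NAME = re.compile(r"^\s*([A-Za-z0-9][A-Za-z0-9._-]*)")


def unreviewed_requirements(lines, approved=APPROVED):
    """Return requirement names in `lines` that are not on the approved list.

    Deliberately simple: skips blank lines, comments and pip options such as
    `-r`, and ignores version specifiers and extras.
    """
    flagged = []
    for line in lines:
        line = line.split("#", 1)[0].strip()
        if not line or line.startswith("-"):
            continue
        match = _NAME.match(line)
        if match and normalize(match.group(1)) not in approved:
            flagged.append(match.group(1))
    return flagged


reqs = ["requests>=2.31", "Python_Dateutil==2.9.0", "# pinned by AI", "fastjsonvalidatorx"]
print(unreviewed_requirements(reqs))
```

Anything flagged should go through the cross-check from tip 1 before it is added to the allowlist.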

Final Takeaway: Smarter AI Use Is Key to Tackling AI Threats in Software Development

AI is not inherently bad. It’s a tool—a powerful one. But like any tool, it can become dangerous if used blindly.

The biggest threat AI poses to custom software development is our overconfidence in it.

By understanding vulnerabilities like package hallucination, we can use AI more intelligently, responsibly, and securely. The industry must now evolve—not to avoid AI, but to build safer practices around it.

In the age of generative code, trust must be earned—not assumed.

Have You Seen AI Hallucinate Code?

If you've encountered an AI recommending a suspicious or non-existent package, share your experience in the comments. Let's build a smarter, more secure dev community—together.